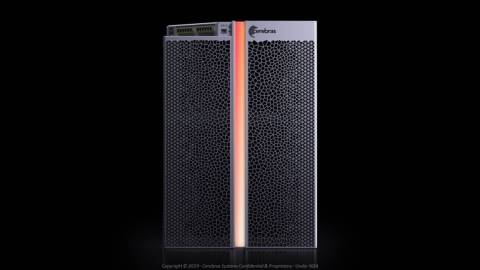

Cerebras Systems, a company dedicated to accelerating Artificial Intelligence (AI) compute, today unveiled its CS-1 system, the world’s fastest AI computer. With every component optimized for AI work, the CS-1 delivers more compute performance in less space and with less power than any other system.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20191119005046/en/

Cerebras Systems Unveils CS-1, the Industry’s Fastest Artificial Intelligence Computer (Photo: Business Wire)

In August, Cerebras delivered the Wafer Scale Engine (WSE), the only trillion transistor wafer scale processor in existence. The Cerebras WSE is 56.7 times larger and contains 78 times more compute cores than the largest GPU, setting a new bar for AI processors. The CS-1 system design and Cerebras software platform combine to extract every ounce of processing power from the 400,000 compute cores and 18 Gigabytes of high performance on-chip memory on the WSE.

In AI compute, chip size is profoundly important. Big chips process information more quickly, producing answers in less time. However, exceptional processor performance is necessary but not sufficient to guarantee industry-leading AI performance. Innovative, high-performance processors, like the WSE, must be combined with dedicated hardware systems and extraordinary software to achieve record-breaking performance. For this reason, every aspect of the Cerebras CS-1 system and the Cerebras software platform was designed for accelerated AI compute.

“The CS-1 is the industry’s fastest AI computer, and because it is easy to install, quick to bring up and integrates with existing AI models in TensorFlow and PyTorch, it delivers value the day it is deployed,” said Andrew Feldman, Founder and Chief Executive Officer, Cerebras Systems. “Depending on workload, the CS-1 delivers hundreds or thousands of times the performance of legacy alternatives at one-tenth the power draw and one-tenth the space per unit compute.”

Cerebras is the only company to undertake the ambitious task of building a dedicated system from the ground up. By optimizing every aspect of chip design, system design, and software, the CS-1 delivers unprecedented performance. With the CS-1, AI work that today takes months can now be done in minutes, and work that takes weeks can now be completed in seconds. Not only does the CS-1 radically reduce training time, but it also sets a new bar for inference latency. For deep neural networks, single image classification can be accomplished in microseconds, thousands of times faster than alternative solutions.

Early customer deployments include Argonne National Laboratory where the CS-1 is being used to accelerate neural networks in pathbreaking cancer studies, to better understand the properties of black holes, and to help understand and treat traumatic brain injuries. The sheer performance of the CS-1 makes it an exceptional solution for the largest and most complex problems in AI.

“The CS-1 is a single system that can deliver more performance than the largest clusters, without the overhead of cluster set up and management,” said Kevin Krewell, Principal Analyst, TIRIAS Research. “By delivering so much compute in a single system, the CS-1 not only can shrink training time but also reduces deployment time. In total, the CS-1 could substantially reduce overall time to answer, which is the key metric for AI research productivity.”

About the CS-1

The CS-1 solution is the fastest AI computer system. It combines three major technical innovations: the CS-1 system, the Cerebras software platform and the WSE.

The CS-1 System

Optimized exclusively for accelerating AI work and built from the ground up by Cerebras Systems, the CS-1 is 15 rack units (26 inches) tall and fits in one-third of a standard data center rack. The CS-1 integrates the Cerebras WSE and feeds its 400,000 AI-optimized compute cores with 1.2 Terabits per second of data. The combination of the massive Input/Output bandwidth (12 x 100 Gigabit Ethernet lanes) and the 18 Gigabytes of on-chip memory enables the CS-1 to deliver vastly more calculations per unit time than legacy offerings. Since all of the compute and communication remains on-chip, the CS-1 uses less than one-tenth the power and takes one-tenth the space of alternative solutions.
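The I/O and form-factor figures above can be sanity-checked with simple arithmetic. The short Python sketch below is illustrative only: the lane count, lane speed, and height in rack units come from the text, while the 1.75-inch rack unit is the standard definition and the 42U rack height is an assumption about what "a standard data center rack" means here.

```python
# Back-of-the-envelope check of the I/O and form-factor figures quoted above.
LANES = 12                    # 100 Gigabit Ethernet lanes (from the text)
GBITS_PER_LANE = 100          # gigabits per second per lane (from the text)
RACK_UNITS = 15               # CS-1 height in rack units (from the text)
INCHES_PER_RACK_UNIT = 1.75   # standard height of one rack unit
STANDARD_RACK_UNITS = 42      # assumption: a common full-height rack

total_tbits = LANES * GBITS_PER_LANE / 1000    # aggregate terabits per second
total_gbytes = LANES * GBITS_PER_LANE / 8      # aggregate gigabytes per second
height_inches = RACK_UNITS * INCHES_PER_RACK_UNIT
rack_fraction = RACK_UNITS / STANDARD_RACK_UNITS

print(f"Aggregate I/O: {total_tbits:.1f} Tb/s ({total_gbytes:.0f} GB/s)")
print(f"Height: {height_inches:.2f} in, {rack_fraction:.0%} of a 42U rack")
```

Under these assumptions, twelve 100 Gigabit lanes add up to the quoted 1.2 Terabits per second, and 15 rack units come to roughly 26 inches, a little over one-third of a 42U rack.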

Unlike clusters of GPUs, which can take weeks or months to set up, require extensive modifications to existing models, consume dozens of data center racks and require complicated proprietary InfiniBand to cluster, the CS-1 takes minutes to set up. Users can simply plug in the standards-based 100 Gigabit Ethernet links to a switch and are ready to start training models at record-breaking speed.

Cerebras Software Platform

The CS-1 is easy to deploy and simple to use. Cerebras’s mission is to accelerate not only time-to-train, but also the end-to-end time it takes for researchers to achieve new insights – from model definition to training to debugging to deployment.

The Cerebras software platform is designed for Machine Learning (ML) researchers to leverage CS-1 performance without changing their existing workflows. Users can define their models for the CS-1 using industry-standard ML frameworks such as TensorFlow and PyTorch. A powerful graph compiler automatically converts these models into optimized executables for the CS-1, and a rich set of tools enables intuitive model debugging and profiling.

The Cerebras software platform consists of four primary elements:

- Integration with common ML frameworks like TensorFlow and PyTorch

- Optimized Cerebras Graph Compiler (CGC)

- Flexible library of high-performance kernels and a Kernel API

- Development tools for debug, introspection, and profiling

The Cerebras Graph Compiler

The Cerebras Graph Compiler (CGC) takes as input a user-specified neural network. For maximum workflow familiarity and flexibility, researchers can use both existing ML frameworks and well-structured graph algorithms written in other general-purpose languages, such as C and Python, to program for the CS-1.

CGC begins the translation of a deep learning network into an optimized executable by extracting a static graph representation from the source language and converting it into the Cerebras Linear Algebra Intermediate Representation (CLAIR). As ML frameworks evolve rapidly to keep up with the needs of the field, this consistent input abstraction allows CGC to quickly support new frameworks and features, without changes to the underlying compiler.

Using its knowledge of the unique WSE architecture, CGC then allocates compute and memory resources to each part of the graph and then maps them to the computational array. Finally, a communication path, unique to each network, is configured onto the fabric.
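To make the resource-allocation step more concrete, here is a toy Python sketch of one plausible policy: dividing a fixed pool of cores among layers in proportion to each layer's compute cost. This is not the CGC algorithm, which the release does not describe; the `Layer` class, the `allocate_cores` function, and the per-layer cost numbers are all hypothetical, and only the 400,000-core total comes from the text.

```python
from dataclasses import dataclass

TOTAL_CORES = 400_000  # compute cores on the WSE (from the text)


@dataclass
class Layer:
    name: str
    cost: int  # hypothetical relative compute cost of the layer


def allocate_cores(layers, total_cores=TOTAL_CORES):
    """Split cores across layers in proportion to their compute cost.

    Integer division plus largest-remainder rounding guarantees that the
    shares add up to total_cores exactly.
    """
    total_cost = sum(layer.cost for layer in layers)
    if total_cost <= 0:
        raise ValueError("layers must have a positive total cost")
    parts = [divmod(layer.cost * total_cores, total_cost) for layer in layers]
    shares = [quotient for quotient, _ in parts]
    leftover = total_cores - sum(shares)
    # Give the cores lost to rounding to the layers with the largest remainders.
    order = sorted(range(len(layers)), key=lambda i: parts[i][1], reverse=True)
    for i in order[:leftover]:
        shares[i] += 1
    return {layer.name: share for layer, share in zip(layers, shares)}


# Hypothetical three-layer network with made-up relative costs.
layers = [Layer("conv1", 120), Layer("conv2", 460), Layer("fc", 20)]
allocation = allocate_cores(layers)
for name, cores in allocation.items():
    print(f"{name:>6}: {cores:,} cores")
```

A proportional split is only the simplest choice. A real compiler would also have to account for memory per layer and for where each region sits on the array, since placement determines communication distance.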

Because of the massive size of the WSE, every layer in the neural network can be placed onto the fabric at once and run in parallel. This approach to whole-model acceleration is unique to the WSE: no other device has sufficient on-chip memory to hold all layers at once on a single chip, or offers the high-bandwidth, low-latency communication that is only possible on silicon.

The final result is a CS-1 executable, customized to the unique needs of each neural network, so that all 400,000 compute cores and 18 Gigabytes of on-chip SRAM can be used at maximum utilization towards accelerating the deep learning application.

Development Tools and APIs

CGC’s integrations with popular ML frameworks mean that industry-standard tools such as TensorBoard are supported out of the box. In addition, Cerebras provides a fully-featured set of debugging and profiling tools to make deeper introspection and development easy.

For ML practitioners, Cerebras provides a debugging suite that allows visibility into every step of the compilation and training run. This enables visual introspection into details like:

- Validity of the compilation on the fabric

- Latency evaluations across a single kernel versus through the entire program

- Hardware utilization on a per-kernel basis to help identify bottlenecks
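As a rough illustration of the last item, the Python sketch below computes per-kernel utilization from cycle counts and flags the most heavily used kernel as the likely bottleneck. It does not use any Cerebras tool or API; the `utilization_report` function and the cycle counts are hypothetical stand-ins for what a profiler might report.

```python
def utilization_report(busy_cycles, total_cycles):
    """Return per-kernel utilization (0.0 to 1.0) and the most heavily used kernel."""
    if total_cycles <= 0:
        raise ValueError("total_cycles must be positive")
    utilization = {name: busy / total_cycles for name, busy in busy_cycles.items()}
    bottleneck = max(utilization, key=utilization.get)
    return utilization, bottleneck


# Hypothetical cycle counts; real numbers would come from a profiler.
busy = {"conv1": 500, "conv2": 950, "fc": 200}
utilization, bottleneck = utilization_report(busy, total_cycles=1_000)
for name, value in utilization.items():
    marker = "  <-- likely bottleneck" if name == bottleneck else ""
    print(f"{name:>6}: {value:.0%}{marker}")
```

When all layers run concurrently, the kernel that is busy nearly all the time limits throughput while the others wait, which is why a per-kernel view is useful for rebalancing resources.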

For advanced developers interested in deeper flexibility and customization, Cerebras provides a Kernel API and a C/C++ compiler based on LLVM that allows users to program custom kernels for CGC. Combined with extensive hardware documentation, example kernels, and best practices for kernel development, Cerebras provides users with the tools they need to create new kernels for unique research needs.

The WSE

The Cerebras WSE is the largest chip ever made and the industry’s only trillion transistor processor. It contains more cores, with more local memory, and more fabric bandwidth, than any chip in history. This enables fast, flexible computation at lower latency and with less energy. The WSE is 46,225 square millimeters, which is 56.7 times larger than the largest GPU. In addition, with 400,000 cores, 18 Gigabytes of on-chip SRAM, 9.6 Petabytes/sec of memory bandwidth, and 100 Petabits/sec of interconnect bandwidth, the WSE contains 78 times more compute cores, 3,000 times more high-speed on-chip memory, 10,000 times more memory bandwidth and 33,000 times more fabric bandwidth than its GPU competitors.
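The multipliers above imply a GPU baseline that the release does not state directly. The Python sketch below simply divides each WSE figure by its quoted ratio to recover that implied baseline. It assumes decimal units (1 Gigabyte = 10^9 bytes) and treats "N times more" as a plain ratio; the results are derived values, not published GPU specifications.

```python
# WSE figure and quoted "times more" ratio for each metric (both from the text).
wse_figures = {
    "compute cores": (400_000, 78),
    "on-chip memory (bytes)": (18e9, 3_000),
    "memory bandwidth (bytes/s)": (9.6e15, 10_000),
    "fabric bandwidth (bits/s)": (100e15, 33_000),
}

# Implied GPU baseline = WSE value / quoted ratio.
implied_gpu = {
    metric: value / ratio for metric, (value, ratio) in wse_figures.items()
}

for metric, baseline in implied_gpu.items():
    print(f"{metric:<28} implied GPU baseline: {baseline:,.0f}")
```

Read this way, the comparison point is a GPU with roughly 5,100 cores, about 6 Megabytes of on-chip memory, a little under 1 Terabyte/sec of memory bandwidth, and about 3 Terabits/sec of interconnect bandwidth.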

For more information on Cerebras Systems and the Cerebras CS-1, please visit www.cerebras.net.

About Cerebras Systems

Cerebras Systems is a team of pioneering computer architects, computer scientists, deep learning researchers, and engineers of all types. We have come together to build a new class of computer to accelerate artificial intelligence work by three orders of magnitude beyond the current state of the art. The CS-1 is the fastest AI computer in existence. It contains a collection of industry firsts, including the Cerebras Wafer Scale Engine (WSE). The WSE is the largest chip ever built. It contains 1.2 trillion transistors and covers more than 46,225 square millimeters of silicon. The largest graphics processor on the market has 21.1 billion transistors and covers 815 square millimeters. In artificial intelligence work, large chips process information more quickly, producing answers in less time. As a result, neural networks that in the past took months to train can now train in minutes on the Cerebras WSE.
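The size comparison can be reproduced from the four numbers in the paragraph above. The following Python sketch is a plain arithmetic check, with variable names chosen for illustration; it also derives transistor density, which the release does not quote.

```python
# Figures quoted in the text.
WSE_TRANSISTORS = 1.2e12   # 1.2 trillion
WSE_AREA_MM2 = 46_225      # square millimeters
GPU_TRANSISTORS = 21.1e9   # 21.1 billion
GPU_AREA_MM2 = 815         # square millimeters

area_ratio = WSE_AREA_MM2 / GPU_AREA_MM2
transistor_ratio = WSE_TRANSISTORS / GPU_TRANSISTORS

# Transistors per square millimeter (derived, not stated in the release).
wse_density = WSE_TRANSISTORS / WSE_AREA_MM2
gpu_density = GPU_TRANSISTORS / GPU_AREA_MM2

print(f"Area ratio:       {area_ratio:.1f}x")
print(f"Transistor ratio: {transistor_ratio:.1f}x")
print(f"Density (M/mm^2): WSE {wse_density / 1e6:.1f}, GPU {gpu_density / 1e6:.1f}")
```

The area ratio matches the 56.7 times figure quoted earlier, and the two densities come out nearly equal, which suggests that the WSE's advantage in this comparison comes from silicon area rather than from packing transistors more tightly.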


Source: Cerebras Systems